Obstacle avoidance

NOA stands for Navigation, Obstacles and AI. It’s the first product of biped. NOA works like a small self-driving car on your shoulders. It uses advanced AI to identify potential obstacles, give GPS instructions and describe your surroundings. Obstacle avoidance technologies typically generate overwhelming feedback for end-users, even in simple scenarios. Here is how NOA is changing that. To learn more about all the features, read our blog.

Object finding

AI scene description

Holes detection

Other smart features

World-first partnership x Honda Research Institute

biped's team partnered with world-class self-driving experts from Honda Research Institute (HRI) to build NOA.

Just like self-driving cars, NOA can identify obstacles, track them and predict if there will be a risk of collision, a couple of seconds ahead.

This drastically limits the amount of feedback generated by NOA, compared with other solutions on the market.
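To give a feel for the "track and predict" idea, here is a minimal Python sketch of a closest-approach check over a short time horizon. It is an illustration only, not NOA's actual algorithm: the `Track` class, the constant-velocity assumption, the 2-second horizon and the 0.5 m safety radius are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical tracked obstacle, expressed relative to the user."""
    x: float   # lateral offset in metres (positive = to the right)
    y: float   # forward distance in metres
    vx: float  # relative lateral velocity in m/s
    vy: float  # relative forward velocity in m/s (negative = approaching)


def min_distance_within(track: Track, horizon_s: float) -> float:
    """Smallest user-obstacle distance during the next `horizon_s` seconds,
    assuming the relative velocity stays constant (an assumption of this sketch)."""
    speed_sq = track.vx ** 2 + track.vy ** 2
    if speed_sq == 0.0:
        t_closest = 0.0  # nothing moves, so the distance never changes
    else:
        # Time at which the distance to the obstacle is minimal.
        t_closest = -(track.x * track.vx + track.y * track.vy) / speed_sq
    # Only look at the window [now, now + horizon].
    t_closest = min(max(t_closest, 0.0), horizon_s)
    return math.hypot(track.x + track.vx * t_closest,
                      track.y + track.vy * t_closest)


def collision_risk(track: Track, horizon_s: float = 2.0,
                   safety_radius_m: float = 0.5) -> bool:
    """True if the obstacle is predicted to come within the safety radius."""
    return min_distance_within(track, horizon_s) <= safety_radius_m


# A pole 2 m straight ahead while walking at 1.5 m/s triggers feedback;
# the same pole 3 m to the side, or 10 m ahead, stays silent.
head_on = Track(x=0.0, y=2.0, vx=0.0, vy=-1.5)
off_to_the_side = Track(x=3.0, y=2.0, vx=0.0, vy=-1.5)
far_ahead = Track(x=0.0, y=10.0, vx=0.0, vy=-1.5)
```

Filtering on predicted risk rather than on mere presence is what keeps the number of alerts low in this sketch: obstacles that are detected but will not be reached within the horizon produce no feedback.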

Link to the announcement

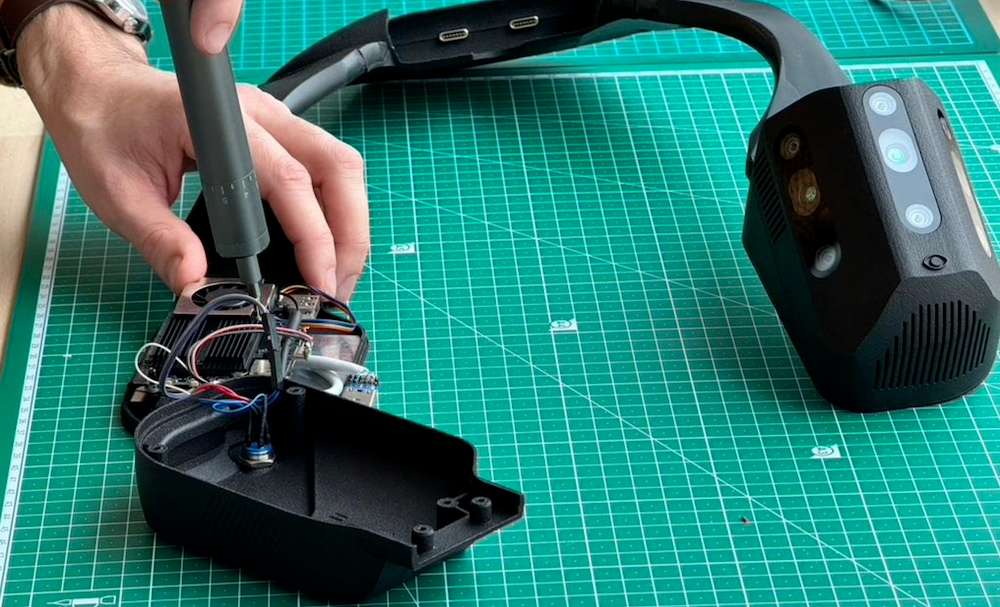

High-precision engineering, Swiss Made

biped is a startup from EPFL, one of Switzerland’s leading engineering institutes. Our devices are assembled and tested by hand, at the heart of Europe.

Intuitive audio feedback

NOA's feedback is science-backed. We worked with leading low-vision scientists and neuroscientists to define the optimal feedback through bone-conduction headphones. Obstacle sounds are based on 3D beeps and react in less than 100 ms. Obstacle elevation is reflected in the pitch of the sound. NOA becomes your companion, as if someone were by your side, helping you move freely. NOA will not create a feeling of dependency, but instead focuses on improving your mobility skills even when you don't use it.
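As a rough illustration of how elevation can be mapped to pitch and direction to a left/right balance, here is a short Python sketch. It is not NOA's implementation: the function names, the 220 to 880 Hz range, the 2 m elevation ceiling and the constant-power stereo panning are all assumptions made for this example.

```python
import math


def elevation_to_pitch_hz(elevation_m: float,
                          low_hz: float = 220.0,
                          high_hz: float = 880.0,
                          max_elevation_m: float = 2.0) -> float:
    """Map obstacle height to a beep frequency (hypothetical ranges).

    The mapping is exponential so that equal steps in height sound like
    equal musical intervals.
    """
    fraction = min(max(elevation_m / max_elevation_m, 0.0), 1.0)
    return low_hz * (high_hz / low_hz) ** fraction


def azimuth_to_stereo_gains(azimuth_deg: float) -> tuple[float, float]:
    """Return (left, right) gains for an obstacle direction.

    -90 is fully left, 0 is straight ahead, +90 is fully right.
    Constant-power panning keeps the perceived loudness steady.
    """
    clamped = min(max(azimuth_deg, -90.0), 90.0)
    angle = (clamped + 90.0) / 180.0 * (math.pi / 2.0)
    return math.cos(angle), math.sin(angle)


# A ground-level obstacle gives the lowest beep, a head-height one the highest.
ground_pitch = elevation_to_pitch_hz(0.0)
head_pitch = elevation_to_pitch_hz(2.0)
left_gain, right_gain = azimuth_to_stereo_gains(-90.0)
```

In this sketch, an obstacle on the user's left at ground level would play as a low beep in the left ear only, while one straight ahead at head height would play as a high beep at equal volume in both ears.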

From 1 to 100+

For most startups, going from 1 prototype to hundreds of units is a massive challenge. The development of a hardware product can take years. We have of course been through that valley: we experienced long delays and iterated on the product dozens of times before reaching a stable version, NOA. This device is now stable, scalable, and produced in growing volumes.

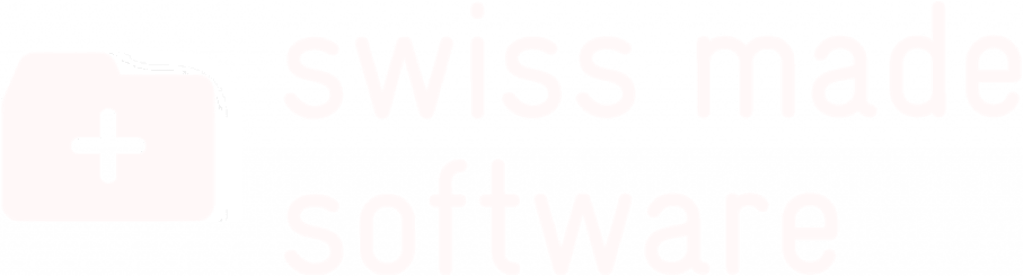

2021: First prototype

We started biped out of a hackathon, a one-week challenge to create an impactful solution. biped started as a belt that could describe surrounding objects. When we introduced the first prototype to end-users and O&M trainers, we quickly realized that the field of view needed to sit higher to leave space for white canes and guide dogs in front. We tested our software extensively with that version and added night vision for users struggling with low light. We focused on developing a new version that would sit on the chest and be easier to mount.

2022: First vest

In early 2022, we developed our first vest. We added night vision to our system and greatly reduced sensory overload by focusing only on obstacles that pose a real risk of collision. At that time, NOA was still only an obstacle detector. We organized many user testing sessions in standardized environments for the vest and came to these conclusions: the vest needed to be clipped on the chest, but it was still challenging for users to put on their device alone. The field of view was also too limited at ground level and on the sides for lateral obstacles. Back in the lab!

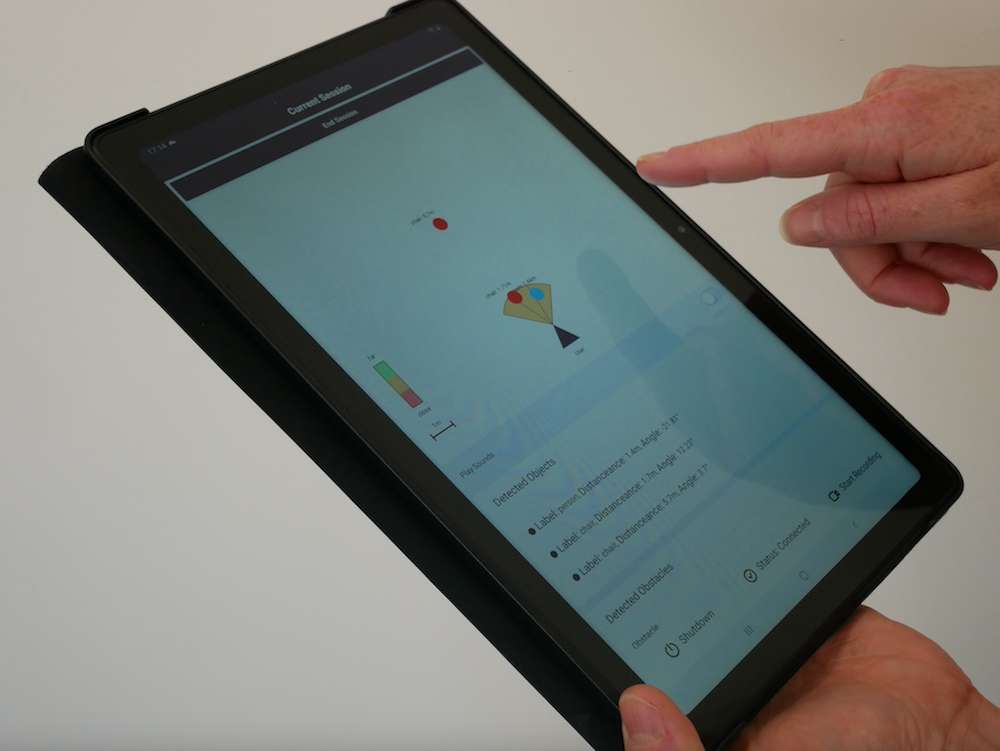

Late 2022: First O&M tools

During test sessions, O&M trainers highlighted that it was challenging to train users without knowing what the device perceives from the environment. So we focused on building a smartphone app capable of streaming what the device perceives from the surroundings, in real time. This tool was put in the hands of O&M trainers from day 1 to get feedback on the information to present.

2023: Doing more

As our obstacle avoidance technology started to reach maturity, we realized that it needed to do more than avoid obstacles. We followed O&M trainers during white cane sessions, joined guide dog training sessions, and converged on the following idea: we need NOA to give accurate GPS instructions that respect O&M rules and, most importantly, to be able to guide the user to a specific element such as crosswalks, stairs, doors and exits, just like guide dogs would do. NOA now had the ambition to become a real all-in-one mobility solution.

2024: Buttons

In 2023, NOA was tested with over 200 end-users, and used on a daily basis by dozens of beta-testers. As NOA grew in complexity, the smartphone app was needed more and more to change settings, control the device, etc. We saw many requests to make the device work without a smartphone app. For our first commercial product, we focused on adding buttons to the device: we designed the layout with end-users, mapped the features with O&M trainers, and quickly got awesome feedback. To learn more about our tech, read our blog.

Over 2500 km walked with NOA! Built with over 250 beta-testers.

Made for daily use

Our team

We’re a small but extremely efficient team of robotics engineers, full stack engineers and AI experts based in Switzerland. Our startup, biped robotics, was created in early 2021. At the time, Mael, our CEO, met a white cane user who was on a FaceTime call with a friend. His friend was telling him when to turn left or right, where obstacles were, and how to find the door to the building: navigation, obstacle avoidance and image description. Just like NOA! Our team has worked since the start with leading institutions such as the ophthalmic hospital in Lausanne.